What if your BI tool could both explain and fix problems in real time?

In our latest Practical BI episode, we sat down with Piers Batchelor, Senior Product Manager at Astrato and a Snowflake Data Superhero, to explore how Snowflake’s advanced features enable exactly that.

This article distills Piers’s insights on Cortex, Snowpark, automatic clustering, writeback, and external functions, showing how we can move from static dashboards to interactive data apps.

Why we’re leaving batch BI behind for live-query data apps

Before we explore Snowflake’s game-changing features, let’s pause on why the traditional BI model no longer cuts it – and how modern data apps close the gap.

In legacy BI workflows, we constantly:

- juggle nightly extract loads and sync jobs that frequently fail

- switch between siloed tools to analyze data, take action, and enforce security

- question whether our reports reflect yesterday’s snapshot or real-time truth

Cloud-native, live-query architectures solve these pain points by:

- querying a single source of truth with governance enforced centrally

- eliminating reloads, manual syncs, and hidden security gaps

- delivering up-to-the-second data every time

This shift is driven by exploding data volumes, growing demands for AI-powered insights, and the need to act instantly on changing conditions. With those fundamentals in place, let’s define what really makes a data app – and why it’s so much more than a dashboard.

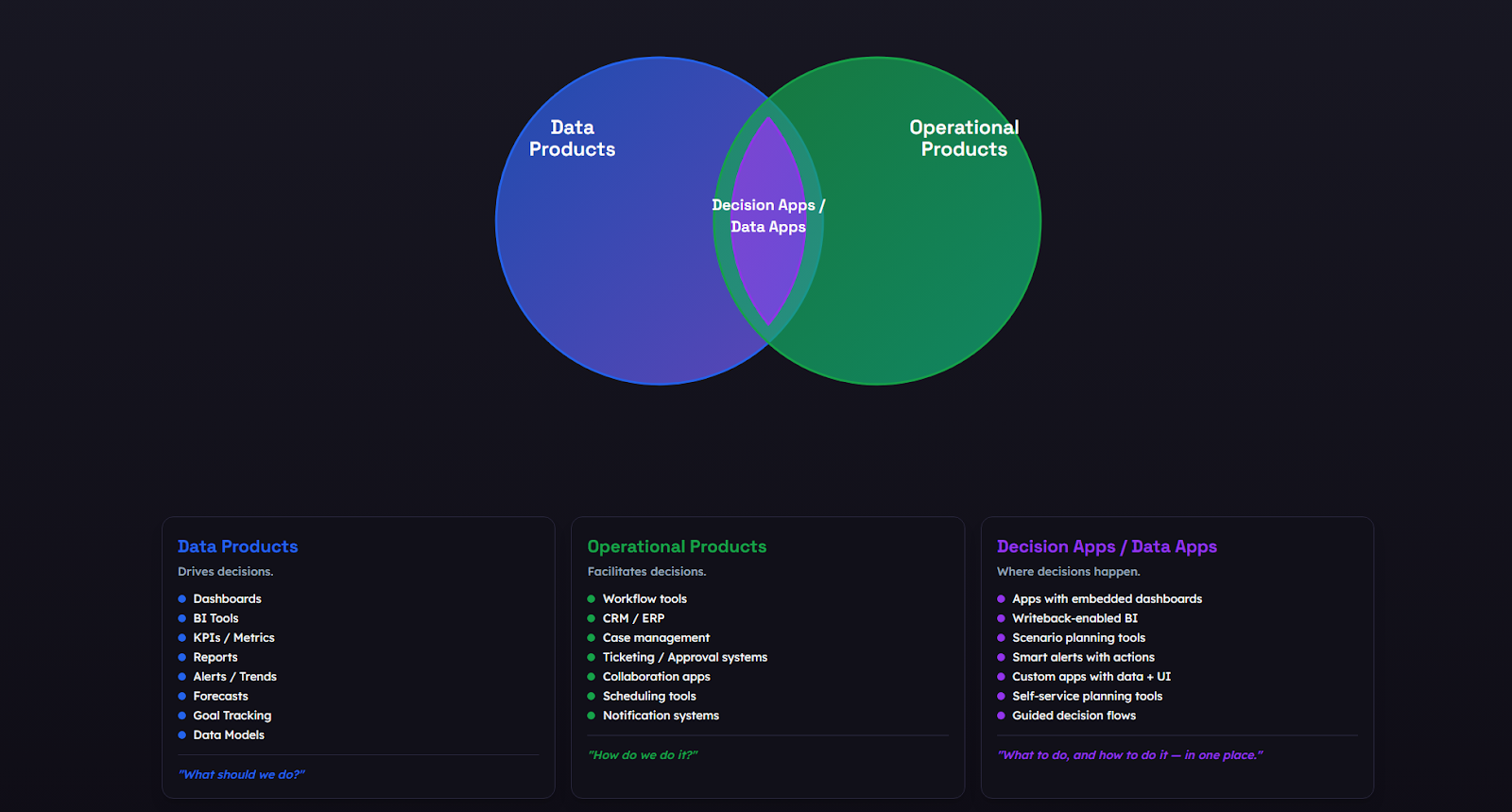

What makes a data app?

Businesses need more than static reports that update hourly or nightly. Product, finance, and success teams demand on-the-fly adjustments – and waiting for IT or BI tickets just isn’t an option.

But first, what a data app is not

- a passive snapshot with fixed refresh intervals

- a read-only view that leaves you switching tools to act

- a siloed dashboard that lives apart from your workflows

And what a data app really is

- it answers “what happened” and “why” with live queries and AI, not stale charts

- it enables “what’s next” and “let’s do it” via embedded writeback, approvals, or alerts

- it integrates seamlessly with downstream systems – CRM, planning, ticketing – so action is a click, not a handoff

Imagine a support lead drilling into real-time ticket sentiment and, without leaving the app, creating a priority task for the engineering team. That’s a daily workflow embedded into a data application – super intuitive, convenient and happening in real time.

So, essentially, data apps collapse analysis and execution into one pane of glass, so teams never lose time toggling tools.

With that clarity in place, let’s dive into the 5 Snowflake ❄️ features that power these next-generation data apps.

Top 5 Snowflake features, explained

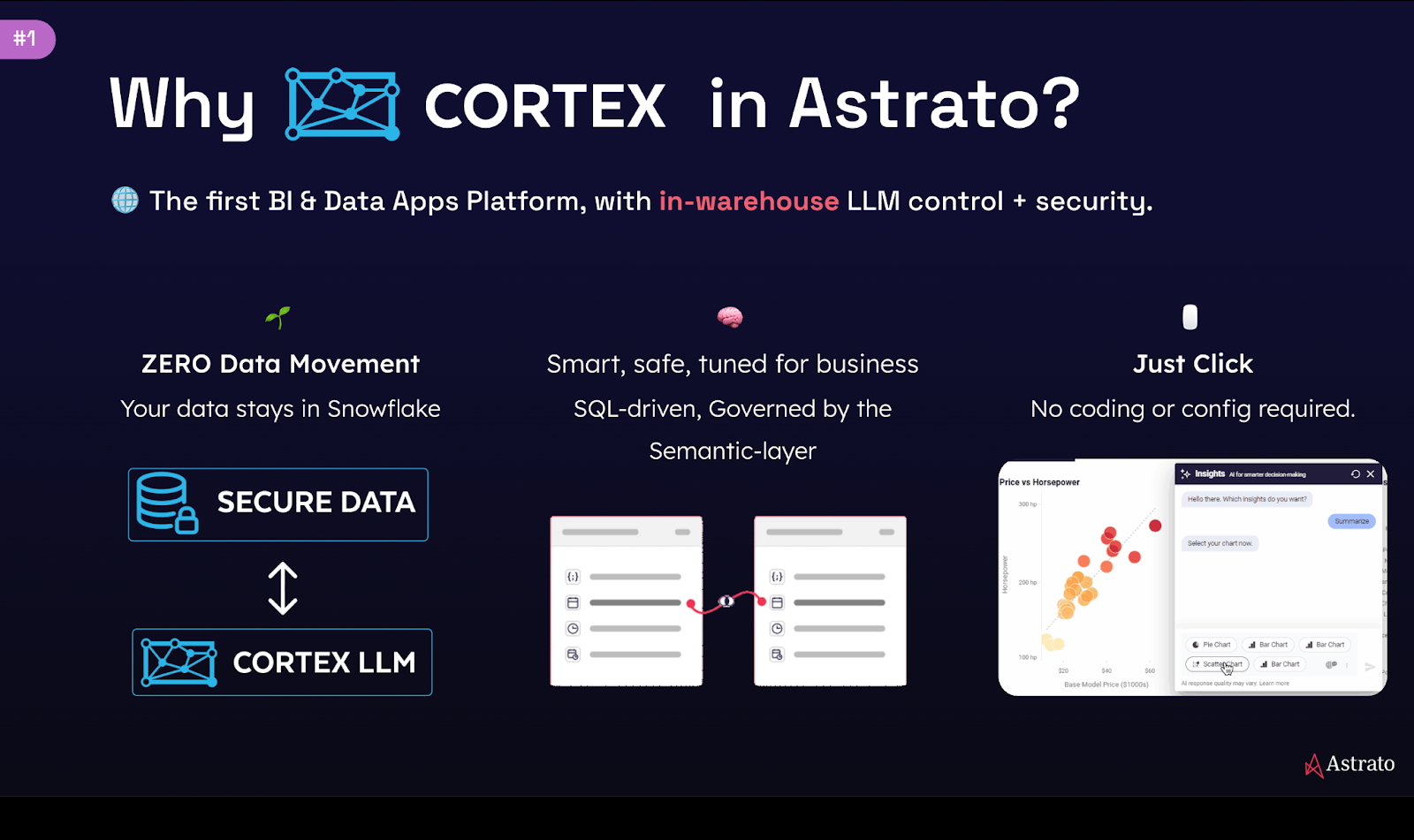

#1 Snowflake Cortex: secure AI inside your warehouse

Cortex is a sealed cleanroom for AI. It allows you to get smart, governed insights without data leaks or SQL errors.

What you can achieve with Snowflake Cortex:

- plug-and-play AI: run powerful LLMs in-warehouse with no setup or data movement

- semantic-layer integration: leverage your curated tables, views, and business metrics so text-to-SQL queries never guess at joins or column names

- custom models: swap in fine-tuned LLMs or prompt templates for domain-specific language

Code snippet:

SELECT SNOWFLAKE.CORTEX.SENTIMENT

(REVIEWS);

SELECT SNOWFLAKE.CORTEX.ENTITY_SENTIMENT

(REVIEWS, ['Teamworking', 'Time-keeping' ,'Work ethic']);

{ "categories": [

{ "name": "Teamworking",

"sentiment": "unknown"},

{ "name": "Time-keeping",

"sentiment": "neutral"},

{ "name": "Work ethic",

"sentiment": "positive"}

]}

How Astrato enhances Cortex:

- prompt enrichment: we inject table and column descriptions so the model “knows” your schema

- click-to-run suggestions: AI proposes SQL snippets based on context, and you approve before execution

- pipeline-free AI: insights run on live data – no external ETL or maintenance

Want to try it out? Follow these best practices:

- prepare and secure your semantic layer before enabling Cortex – document tables, views, and metrics and apply role-based access controls

- monitor AI usage via ACCOUNT_USAGE.QUERY_HISTORY to track function calls, cost, and performance

#2 Snowpark: bring your code to the data

Snowpark is Snowflake’s developer framework that lets you run Python, Java, or Scala workloads directly inside your warehouse.

Instead of exporting data to external clusters, Snowpark executes User-Defined Functions (UDFs) and stored procedures where the data lives, unlocking real-time analytics and custom logic on fresh data.

What you can achieve with Snowflake Snowpark:

- in-warehouse execution: run Python, Java, or Scala UDFs and stored procedures without moving data

- custom analytics: embed risk scoring, text summarization, or domain logic directly into queries

- real-time results: every filter, calculation, or what-if scenario computes on the freshest data

How Astrato enhances Snowpark:

- prebuilt templates: Astrato provides starter UDFs and pipelines for common use cases (churn, forecasting, anomaly detection)

- integrated debugging: inline logs and Snowflake Worksheets simplify function troubleshooting

- automated deployment: CI/CD scripts sync Snowpark code from Git into Snowflake stages on every commit

Want to try it out? Follow these best practices:

- keep UDFs focused and leverage vectorized operations to maximize performance

- monitor compute usage with resource monitors and set alerts for unexpected spikes

- version-control your code in Git and tie deployments to Snowflake stages for reproducibility

Snowpark is your workshop on the factory floor – code runs where the data lives, so teams iterate on models and logic instantly, without spinning up external clusters.

#3 Automatic clustering: prune partitions for consistent sub-second performance

Snowflake’s automatic clustering continuously reorganizes micro-partitions behind the scenes as new data arrives. Instead of scanning every file, queries only read the partitions that matter – delivering predictable, sub-second response times even on tables with billions of rows.

How it works:

- micro-partition reorganization: as data lands, Snowflake reclusters partitions along your specified key

- dynamic pruning: each query skips irrelevant partitions, touching only the data slices needed

- self-tuning: no manual index rebuilds or vacuum jobs – Snowflake continuously optimizes in the background

And here’s why that matters:

- consistent speed at scale

Queries against a 1 billion-row table that once took 4 seconds can run in 0.3 seconds, without upsizing the warehouse. - cost efficiency

By pruning away 90–95 percent of unused partitions, you pay only for the compute you actually use. - governed performance

Clustering respects row-level security and masking policies, so every user gets fast, compliant results.

How Astrato leverages automatic clustering:

- query-log analysis

Astrato reviews historical filter patterns to recommend optimal clustering keys. - monitoring and alerts

We track SYSTEM$CLUSTERING_INFORMATION to detect when reclustering lag exceeds thresholds. - adaptive key tuning

For evolving workloads, Astrato can adjust clustering keys as query patterns shift.

Want to give it a try? Follow these best practices:

- align keys with common filters

Choose clustering columns that match your most frequent WHERE clauses. - avoid high-cardinality traps

Don’t cluster on overly unique columns (user IDs, GUIDs) that fragment partitions. - validate regularly

Automatic clustering is like a GPS routing only the fastest streets – your queries skip the back alleys.

#4 Writeback: enable real-time edits and workflows from your dashboards

Astrato’s writeback feature lets users update Snowflake tables directly from their BI interface – then uses Snowflake’s compute and API integration features to trigger downstream processes without leaving the dashboard.

Apply changes and kick off workflows instantly:

- direct Snowflake writes: push edits from your data app into Snowflake tables in real time

- scenario planning & approvals: adjust budgets or operational parameters and store results immediately

- trigger downstream logic: combine with Snowpark procedures or external functions to recalculate metrics, send notifications, or update third-party systems

How Astrato leverages Snowflake for writeback:

- audit-ready schemas: Astrato scaffolds tables with modified_by, modified_at, and operation_type columns

- secure access: writeback interfaces use secure views and grants to limit who can update which fields

- end-to-end workflows: after writeback, Snowpark recalculates dependent logic and external functions sync changes to CRM or notification tools

Want to give it a try? Follow these best practices:

- track every change with audit columns and history tables to support governance and rollback

- validate inputs using Snowpark procedures or database constraints before committing data

- restrict write permissions via secure views and role-based grants to prevent unauthorized edits

Writeback bridges insight and action, so users can edit data and trigger live workflows, all without leaving their dashboard.

4.5 External functions: orchestrate cross-system workflows from SQL

External functions transform Snowflake from a read-only warehouse into the control plane for your entire data ecosystem – calling out to APIs, triggering processes, and keeping every system in lockstep.

Invoke APIs directly from SQL:

- API integration: call services like DocuSign, HubSpot, Salesforce, planning or scheduling tools right from Snowflake

- automated actions: send notifications, kick off approval workflows, update CRM records, or post to messaging channels

- no glue code: eliminate ETL scripts, middleware, or separate integration platforms – your SQL is the orchestrator

How Astrato amplifies external functions:

- prebuilt connectors: Astrato provides ready-to-use adapters for common systems, reducing setup time to minutes

- secure key management: API credentials and secrets are stored and rotated automatically in Snowflake’s external function integration

- chained workflows: define multi-step actions (writeback → Snowpark processing → external calls) with a single click in your data app

Want to give it a try? Follow these best practices:

- lock down credentials: store API keys in Snowflake secrets or vault, and grant external function permissions only to necessary roles

- implement retry and backoff: wrap calls in Snowpark procedures or use built-in retry settings to handle transient network errors

- audit every call: log function invocations and responses in metadata tables to ensure observability and simplify troubleshooting

External functions turn Snowflake into your workflow engine, so you can manage, automate, and sync every system with a single SQL statement.

Next steps: start building your first data app

Unlock real-time insights and workflows today! No more waiting on batch jobs or handoffs. Astrato marries Snowflake’s AI, compute, and integration features into turnkey data apps that are fast, secure, and fully governed.

Your quick-start checklist:

✅ Define your semantic layer before enabling AI workloads so every Cortex query respects your tables, views, and metrics

✅Modularize and version Snowpark code for faster iteration, easier debugging, and reliable rollbacks

✅Choose clustering keys that match query patterns to maintain sub-second performance at scale

✅Audit all writebacks and external function calls to ensure governance, traceability, and compliance

⚡ Take action now:

- explore our demo gallery to see live data apps in action

- dive into the Astrato docs for step-by-step setup guides

- sign up for a free trial and launch your first data app in minutes

Your next-gen data app awaits!

.avif)